Microsoft Copilot promises massive productivity gains, but are organizations truly prepared to use it safely and effectively?

In a recent article, Matt Einig, CEO of Rencore, challenges the ROI claims made in the Forrester report "The Total Economic Impact™ Of Microsoft 365 Copilot," warning that many organizations are rushing into AI adoption without the basic governance needed to manage the risks.

Our Key Takeaways from the Article

- A key assumption of the Forrester report is that the data Copilot accesses is accurate and protected by the right guardrails, which isn't always the case.

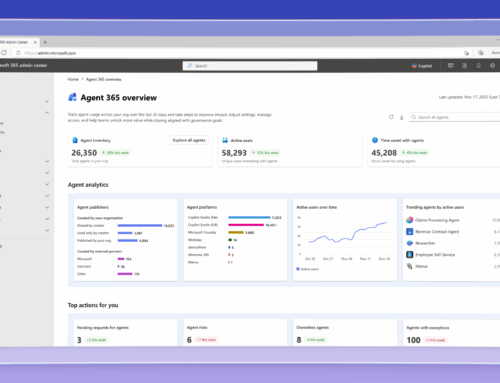

- Agent “sprawl” is real, and organizations are rapidly creating automations and AI-driven tools without policies or visibility into who owns, governs, or monitors them.

- ROI figures are misleading if they don't account for the cost of rework, compliance remediation, or uncontrolled data access.

You can read the full article here: The ROI Illusion: Hidden Risks Behind Microsoft’s Copilot Productivity Claims

This article articulates a major pain point we've seen when implementing Microsoft 365 Copilot: businesses understand the value Copilot can bring to their employees but underestimate the work required to realize it. Ensuring that your Microsoft 365 environments are clean, controlled, and secure, and that your users are prepared, is a necessary first step for any AI initiative.

What AI Readiness Means for Document Governance

True AI readiness isn't just about installing Copilot; it's about ensuring your content environment is structured, governed, and secure. That includes:

1. Clean Up Your Content

In working with our clients to implement Copilot, we've seen first-hand how much Redundant, Obsolete, and Trivial (ROT) content lurks beneath the surface, waiting to sabotage Copilot implementations. If you have ROT content, or your content lacks tagging or classification, Copilot will struggle to surface the right information or, worse, will surface the wrong information. Cleaning up ROT, applying metadata and labels, and reorganizing content structures are key to improving discoverability and the effectiveness of Copilot.

2. Access and Permissions Audit

Copilot operates within the bounds of your permissions, but if those permissions are messy, so are your results. Auditing your sensitivity labels, data loss prevention (DLP) policies, and permission settings is critical to ensuring data security and compliance. We encourage our clients to use Microsoft Purview to secure and govern data before implementing Copilot.

3. Automation and Lifecycle Oversight

As we saw with the growth of Power Platform and citizen developers, governance becomes crucial once AI agents are created and begin automating workflows and summarizing documents. To prevent sprawl, we recommend putting systems in place that show which agents exist and who created them, setting up approval workflows for new automations, and configuring policies for reviewing, expiring, or retiring unused agents.

4. User Education and Enablement

Copilot is a powerful tool, but it’s only as smart as your users. Providing end-user training, developing enablement strategies, and implementing governance frameworks that adapt to rapid innovation will help foster adoption and set you up for sustainable growth.

Are You Ready for Copilot?

AI doesn't fix disorganized content; it magnifies it. If your content is poorly governed, your Copilot experience will reflect that with confused responses, exposed data, and a false sense of productivity. To get the most out of Copilot, invest in AI readiness so you can realize the ROI that AI promises.

Partner with Compass365 to prepare your Microsoft 365 environments for successful Copilot adoption. We help organizations assess their current environment, optimize content, and implement the governance structures needed for secure, scalable AI adoption.

Contact us to talk about your Copilot readiness and content governance strategy.