Ingrid Camill discusses the importance of thorough testing in implementing SharePoint and Microsoft 365 solutions, drawing from her 20 years of experience.

- Necessity of Testing: Testing is essential to confirm that the system meets technical specifications and business needs, preventing post-deployment issues.

- Challenges of Testing: Testing is labor-intensive and requires time and resources, but skipping it can lead to significant problems.

- Common Issues from Poor Testing: Examples of issues caused by inadequate testing include permission configuration errors, browser compatibility problems, file migration issues, performance problems, and workflow dead ends.

- Creating a Test Plan: A test plan should be collaborative and define the who, what, where, when, why, and how of the testing process.

- Who Should Test: Testing should involve representatives from each user role and technical team impacted by the new system.

- Types of Testing: Different types of testing include functional, usability, load/performance, migration, integration, user acceptance, recovery, and regression testing.

- Benefits of Testing: Effective testing mitigates risks, builds user confidence, and helps avoid costly mistakes post-deployment.

Testing for Success

In my 20 years of implementing SharePoint and Microsoft 365-based solutions, I’ve spent a lot of time thinking about testing. Is testing necessary? What’s the most effective way to test? Could we have avoided a post-deployment issue with better testing? How do I engage people in testing?

Testing is hard work. It requires time and resources. You can develop and deploy a new SharePoint solution without any testing, but should you? Early in my career, as an inexperienced consultant, it was tempting to skip testing or cut corners in the testing process when we were under tight timelines and budget constraints.

At its core, testing confirms that the system has been built to specifications and that the specifications meet the business need. If we thoroughly gathered business requirements and were meticulous in the configuration and development process, testing shouldn’t be needed, right?

Experience has shown me how wrong I was. Our content management and collaboration systems are complicated, even though they’re built on robust, stable software platforms. Over the years I’ve had to troubleshoot and resolve a variety of production system issues that could have been avoided with a better testing methodology:

- Hundreds of users didn’t have access to critical business documents for two days because the permission configuration for their user role didn’t work as anticipated. Testing had been performed using the System Administrator user role.

- Users with one type of web browser were unable to access the Save button on a new web form. All testing had been done via a different web browser.

- A month after a major file migration, and after the source system had already been retired, users reported that some files seemed to be corrupted. Investigation determined they had been migrated with the wrong file extension. Luckily, correcting the file extension in the new system made the files viewable again, so no data was lost.

- After migrating 800K records into a new system during the production deployment, users reported key pages and actions within the system were taking multiple minutes to load. The performance was unacceptable. We determined testing had been performed with much smaller data sets and the configuration didn’t scale effectively.

- A growing number of “in progress” workflows were building up in the system after launching a new business process, confusing participants because it was unclear how to complete the process and get it off their dashboard. We determined that one of the “paths” within the workflow reached a dead end without updating the workflow status. We hadn’t designed an outcome for that scenario.
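The workflow dead end in the last example is the kind of problem that can be checked mechanically before launch. Below is a minimal sketch, assuming the workflow can be written down as a map of each state to the states it can move to next. The state names, `WORKFLOW`, `TERMINAL_STATES`, and `find_dead_ends` are all hypothetical and only for illustration; they are not taken from SharePoint or any workflow product.

```python
from collections import deque

# Hypothetical workflow: each state maps to the states it can move to next.
# State names are illustrative only, not taken from a real system.
WORKFLOW = {
    "Submitted": ["In Review"],
    "In Review": ["Approved", "Rejected", "Needs Info"],
    "Needs Info": [],  # no outgoing path and not a final status
    "Approved": [],
    "Rejected": [],
}
# States where the workflow status is considered complete (an assumption).
TERMINAL_STATES = {"Approved", "Rejected"}


def find_dead_ends(workflow, terminal_states):
    """Return the states from which no terminal state can be reached."""
    # Build a reversed map so we can walk backwards from the terminal states.
    reverse = {state: [] for state in workflow}
    for state, next_states in workflow.items():
        for nxt in next_states:
            reverse.setdefault(nxt, []).append(state)

    # Breadth-first search: collect every state that can eventually finish.
    can_finish = set(terminal_states)
    queue = deque(terminal_states)
    while queue:
        current = queue.popleft()
        for previous in reverse.get(current, []):
            if previous not in can_finish:
                can_finish.add(previous)
                queue.append(previous)

    # Anything left over is a path that never completes.
    return sorted(set(workflow) - can_finish)


print(find_dead_ends(WORKFLOW, TERMINAL_STATES))  # ['Needs Info']
```

A check like this does not replace testing each path with real users, but it can flag a path with no designed outcome before anyone's dashboard fills up.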

If your new solution has issues, they WILL be found. Effective testing allows you to find and correct the issues before they cause business interruption, data loss, or loss of user and leadership confidence.

Test Plan

Testing starts with preparing a test plan. This is a collaborative activity with feedback from as many project team members as possible and can start as early as the beginning of the project. It defines the who, what, where, when, why, and how of testing for the project.

Who

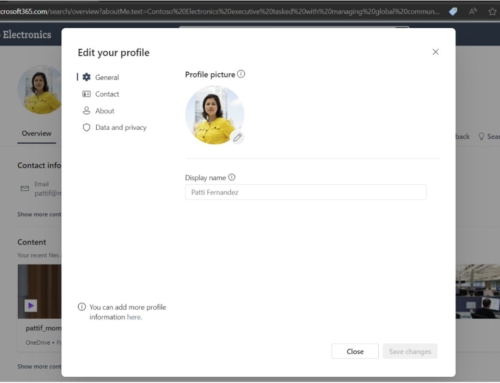

Who needs to test the system? Consider how permissions and tasks may differ from user to user and the discrete system components and integration points that require testing. At least one representative of each planned user role should be included, along with a representative of each technical team or system that is impacted by the new system. Examples include: system administrators, content authors, content viewers, form submitters, workflow approvers, system integration developers, content migration consultants, desktop support technicians.
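One simple way to confirm that every planned role has at least one tester is to compare the role list against the tester sign-up list. The sketch below assumes both lists are kept somewhere they can be exported; the role names, tester names, and the `roles_without_testers` helper are hypothetical placeholders.

```python
# Hypothetical planned roles and tester sign-ups; all names are placeholders.
PLANNED_ROLES = {
    "System Administrator",
    "Content Author",
    "Content Viewer",
    "Workflow Approver",
}
TESTERS = [
    {"name": "Tester A", "role": "System Administrator"},
    {"name": "Tester B", "role": "Content Author"},
    {"name": "Tester C", "role": "Content Author"},
]


def roles_without_testers(planned_roles, testers):
    """Return the planned roles that no tester has signed up to cover."""
    covered = {tester["role"] for tester in testers}
    return sorted(planned_roles - covered)


print(roles_without_testers(PLANNED_ROLES, TESTERS))
# ['Content Viewer', 'Workflow Approver']
```

In this example only administrators and authors are testing, which is how a permission problem for another role can go unnoticed until go-live.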

What

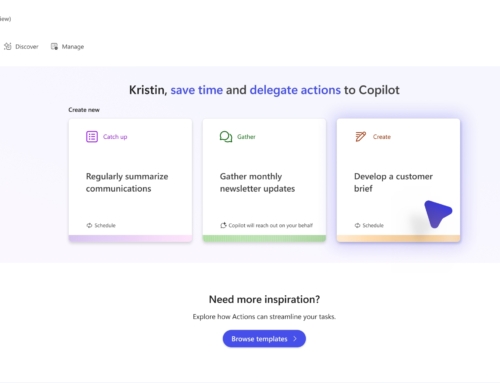

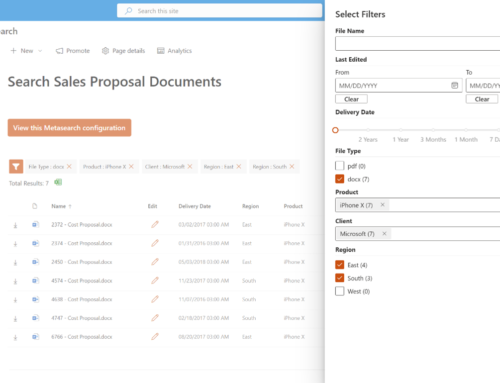

What needs to be tested? Start with the use cases that have been defined for the system. These may have been documented as “business requirements” and “technical requirements” or “user stories”. Each use case needs to be tested to confirm it’s working as designed and doesn’t cause unintended consequences. Examples of different types of testing include: functional testing, usability testing, load or performance testing, migration testing, integration testing, user acceptance testing, recovery testing, regression testing.

Where

Where will testing be performed? Some types of testing may require a dedicated “test” environment. Other types of testing may be done in the “production” environment, and then data can be reset before production deployment. A “test” environment might require additional servers or SaaS resources, user accounts, and desktops or virtual desktops that can be used to test alternative configurations without interrupting business operations. Include the creation of these test environments and configurations in your project plan. For example, document management systems frequently integrate with Microsoft Office installed on users’ computers. When we’re migrating a business from one DM system to another, the integrations can’t exist in parallel on the same computer. We’ll set up a computer lab or virtual desktops with the new Microsoft Office integration for testing and training.

When

In general, testing occurs before production deployment, and different types of testing may happen at different times. Analyze your project schedule and development activities to determine when testing will provide the most benefit, and add the activities to the schedule. This will have an impact on your delivery timeline, but the extra time helps avoid costly mistakes after production deployment. Examples:

- Perform usability testing during the design and prototyping phase to get feedback on the UI/UX design as early as possible.

- Perform load and performance testing as soon as infrastructure is available to confirm it will support the anticipated user load and content storage.

- Perform content migration testing as soon as migration tools and scripts are prepared to confirm all content types migrate successfully.

- Perform functional and user acceptance testing after all system configurations and integrations are completed so the test system can be run in parallel to the existing system or process, testing with real-world scenarios.

- Developers and IT project team members should perform their testing before asking business users to test, so that obvious bugs are found and resolved before business users first experience the system.
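For content migration testing, one possible check is to compare an inventory of the source system against an inventory of the target system after a trial migration. The sketch below assumes each system can export a manifest of document ID, file name, and checksum; the manifest shape, the sample values, and `compare_manifests` are assumptions for illustration, not features of any particular migration tool.

```python
from pathlib import PurePosixPath


def compare_manifests(source, target):
    """Compare {doc_id: (file_name, checksum)} manifests from both systems.

    Returns a list of (doc_id, problem) tuples in source order.
    """
    problems = []
    for doc_id, (src_name, src_sum) in source.items():
        if doc_id not in target:
            problems.append((doc_id, "missing in target"))
            continue
        tgt_name, tgt_sum = target[doc_id]
        # Compare extensions case-insensitively (.PDF and .pdf are the same).
        src_ext = PurePosixPath(src_name).suffix.lower()
        tgt_ext = PurePosixPath(tgt_name).suffix.lower()
        if src_ext != tgt_ext:
            problems.append((doc_id, f"extension changed: {src_ext} -> {tgt_ext}"))
        if src_sum != tgt_sum:
            problems.append((doc_id, "checksum mismatch"))
    return problems


# Hypothetical sample manifests; IDs, names, and checksums are made up.
source = {
    "DOC-1": ("budget.xlsx", "a1"),
    "DOC-2": ("contract.pdf", "b2"),
    "DOC-3": ("memo.docx", "c3"),
}
target = {
    "DOC-1": ("budget.xlsx", "a1"),
    "DOC-2": ("contract.doc", "b2"),
}

for doc_id, problem in compare_manifests(source, target):
    print(doc_id, problem)
# DOC-2 extension changed: .pdf -> .doc
# DOC-3 missing in target
```

Running a comparison like this while the source system is still available leaves time to correct the migration scripts and repeat the test.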

Why

Because testing is hard work and requires time and effort from many people, it’s important to clearly define why we’re testing. Many testers are not official project team members and are busy with their regular job duties, taking on the task of testing as a special request. What benefit do testers receive for putting in this hard work? Some “whys” I’ve communicated on projects include:

- Confirm for yourself and your team that you can continue to effectively do your job when the new system goes live.

- Get early access to the new system so you can give the development team feedback and help us shape the design.

- Help us protect your data as we move it to the new system.

- Get early access to the new system so you can get comfortable with it before it goes live, like an extended training period.

How

“How” we’re testing pulls the plan together and puts it into action. It defines the tools and procedures that will be used to conduct testing, addressing each role you’ve defined in the “Who” section of the plan and each type of testing defined in the “What” section of the plan. Examples of tools and procedures you may include in the plan:

- Role-based test script: A document that describes the use cases, testing actions, and expected outcomes for a user role. For example, a “Content Viewer” test script instructs the user how to access the system, search for documents, and view documents, and an “Integration Developer” test script describes how to trigger an integration and verify the data was sent and received between systems successfully.

- Issue log: A list of issues and questions that occur during testing. Each entry should contain a title, description, submitter, assignee, priority, status, and resolution. Consolidating issues into a single list allows the project team to track all issues to resolution and create a list of resolutions for future support teams.

- Change request log: A list of design changes requested during testing, for discussion and approval either before production deployment, or in a future enhancement cycle.

- Testing meetings: Use meetings to perform interactive testing with user groups. Testing together in a meeting allows system developers to get real-time feedback from users and observe issues as they’re happening. We can also provide training while users are testing and efficiently test processes that require multiple users to complete, like document review and approval workflows.

- Parallel testing: A testing technique that uses real-world business scenarios and data instead of a test script. As staff members complete a familiar task within the current system, they switch over to the test system and repeat the same task with the same data.
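The issue log described above maps naturally to a small record structure. Here is a minimal sketch using the fields listed for each entry; the `Issue` class, the allowed priority and status values, the sample entries, and `open_issues_by_priority` are assumptions for illustration. In practice the log might just as well live in a SharePoint list or a spreadsheet.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Issue:
    """One entry in the issue log, using the fields named in the test plan."""
    title: str
    description: str
    submitter: str
    assignee: str
    priority: str          # assumed values: "High", "Medium", "Low"
    status: str = "Open"   # assumed values: "Open", "In Progress", "Resolved"
    resolution: str = ""


def open_issues_by_priority(issues):
    """Count unresolved issues per priority for a quick status report."""
    return Counter(i.priority for i in issues if i.status != "Resolved")


# Hypothetical sample entries; names and details are made up.
issue_log = [
    Issue("Save button not reachable", "Button is off-screen in one browser",
          "Tester A", "Developer B", "High"),
    Issue("Viewer role cannot open library", "Access denied on Contracts",
          "Tester C", "Admin D", "High", "Resolved", "Added role to group"),
    Issue("Label wording unclear", "Rename 'Submit' to 'Send for review'",
          "Tester A", "Developer B", "Low", "In Progress"),
]

print(open_issues_by_priority(issue_log))
# Counter({'High': 1, 'Low': 1})
```

Keeping the resolution on each entry is what turns the log into a reference for future support teams.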

Ultimately, preparing and executing a test plan mitigates risk and builds confidence with the future users of the new solution. If you do have issues after production deployment, consider whether testing could have detected and solved the problem sooner. This allows you to continuously improve your testing methodology.

Compass365, a Microsoft Solutions Partner, delivers SharePoint, Microsoft 365, and Power Platform solutions that help IT and Business leaders improve the way their organizations operate and how their employees work.
